How To Create Data Lake

Data lakes provide a complete and authoritative data store that can power data analytics, business intelligence, and machine learning.

Building a lakehouse with Delta Lake

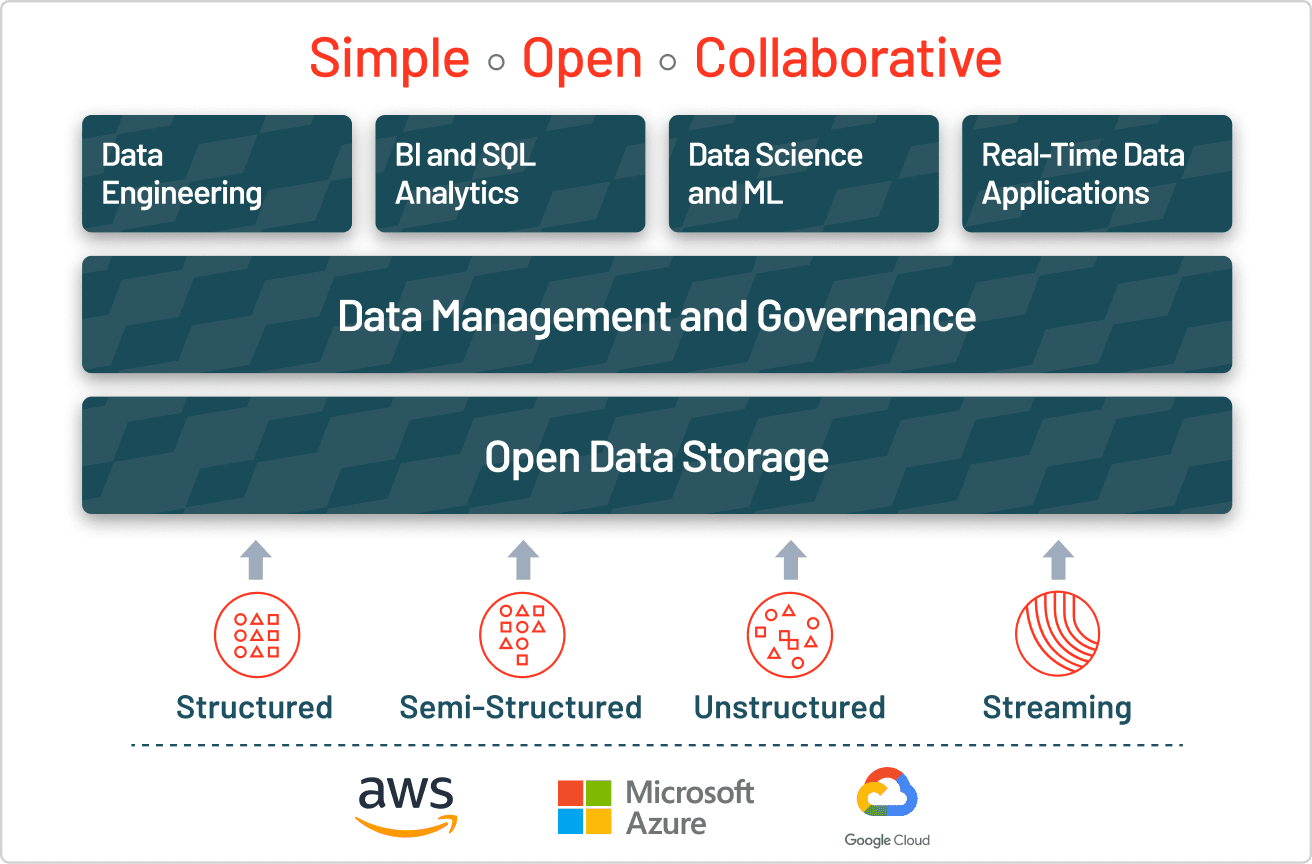

To build a successful lakehouse, organizations have turned to Delta Lake, an open-format data management and governance layer that combines the best of both data lakes and data warehouses. Across industries, enterprises use Delta Lake to power collaboration by providing a reliable, single source of truth. By delivering quality, reliability, security and performance on the data lake for both streaming and batch operations, Delta Lake eliminates data silos and makes analytics accessible across the enterprise. The result is a cost-efficient, highly scalable lakehouse that offers self-service analytics to end users.

Data lakes vs. Data lakehouses vs. Data warehouses

| | Types of data | Cost | Format | Scalability | Intended users | Reliability | Ease of use | Performance |
|---|---|---|---|---|---|---|---|---|
| Data lake | All types: structured data, semi-structured data, unstructured (raw) data | $ | Open format | Scales to hold any amount of data at low cost, regardless of type | Limited: data scientists | Low quality, data swamp | Difficult: exploring large amounts of raw data can be difficult without tools to organize and catalog the data | Poor |
| Data lakehouse | All types: structured data, semi-structured data, unstructured (raw) data | $ | Open format | Scales to hold any amount of data at low cost, regardless of type | Unified: data analysts, data scientists, machine learning engineers | High quality, reliable data | Simple: provides simplicity and structure of a data warehouse with the broader use cases of a data lake | High |
| Data warehouse | Structured data only | $$$ | Closed, proprietary format | Scaling up becomes exponentially more expensive due to vendor costs | Limited: data analysts | High quality, reliable data | Simple: structure of a data warehouse enables users to quickly and easily access data for reporting and analytics | High |

Lakehouse best practices

Use the data lake as a landing zone for all of your data

Save all of your data into your data lake in its raw form, without transforming or aggregating it, so that it is preserved for machine learning and data lineage purposes.
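As a minimal sketch of the landing-zone idea, the snippet below writes an incoming payload byte-for-byte into a date-partitioned "raw" folder. The `land_raw` function, the folder layout, and the file names are assumptions made for illustration; a real lake would typically sit on cloud object storage rather than a local directory.

```python
import tempfile
from datetime import date
from pathlib import Path


def land_raw(lake_root: Path, source: str, name: str, payload: bytes, ingest_date: date) -> Path:
    """Store a payload byte-for-byte in a date-partitioned raw zone."""
    # Hypothetical layout: <lake_root>/raw/<source>/ingest_date=YYYY-MM-DD/<name>
    target_dir = lake_root / "raw" / source / f"ingest_date={ingest_date.isoformat()}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / name
    if target.exists():
        # Landed files are treated as immutable, so never overwrite one.
        raise FileExistsError(target)
    target.write_bytes(payload)  # no parsing, cleaning, or aggregation
    return target


# Example: land one raw JSON export in a temporary "lake".
with tempfile.TemporaryDirectory() as tmp:
    raw = b'{"order_id": 1, "amount": "19.99"}\n'
    path = land_raw(Path(tmp), "orders", "export-0001.json", raw, date(2024, 1, 15))
    landed_bytes = path.read_bytes()
    relative_path = path.relative_to(tmp).as_posix()
```

Keeping the original bytes means later pipelines can always be re-run from the source data, which is what makes lineage tracking and new machine learning features possible after the fact.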

Mask data containing private information before it enters your data lake

Personally identifiable information (PII) must be pseudonymized in order to comply with GDPR and to ensure that it can be saved indefinitely.
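One common way to pseudonymize a field is a keyed hash (HMAC), which maps the same input to the same token without storing the original value. The sketch below is illustrative only: `pseudonymize`, `mask_record`, the field names, and the demo key are all assumptions, and whether a given scheme satisfies a regulation depends on how the key is stored and who can access it.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a stable HMAC-SHA256 token for a PII value."""
    normalized = value.strip().lower()  # so "A@x.com " and "a@x.com" match
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, pii_fields: set, secret_key: bytes) -> dict:
    """Copy a record, replacing the listed PII fields with tokens."""
    masked = {}
    for field_name, value in record.items():
        if field_name in pii_fields and value is not None:
            masked[field_name] = pseudonymize(str(value), secret_key)
        else:
            masked[field_name] = value
    return masked


# Assumption: a real key would come from a secrets manager, not source code.
DEMO_KEY = b"demo-key-not-for-production"
record = {"email": "Jane.Doe@example.com ", "country": "NL", "order_total": 42.5}
masked = mask_record(record, {"email"}, DEMO_KEY)
```

Because the token is deterministic for a given key, masked records can still be joined on the pseudonymized column before they enter the lake.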

Secure your data lake with role- and view-based access controls

Adding view-based ACLs (access control lists) enables more precise tuning and control over the security of your data lake than role-based controls alone.
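To illustrate how a view narrows access beyond what a role alone expresses, the sketch below uses an in-memory SQLite database. SQLite has no users or grants, so the role-to-relation mapping is simulated in Python; the table, view, and role names are hypothetical, and a production lake would enforce this in its own catalog or query engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER, region TEXT, email TEXT, lifetime_value REAL);
    INSERT INTO customers VALUES
        (1, 'EU', 'a@example.com', 120.0),
        (2, 'US', 'b@example.com', 340.0);
    -- View for EU analysts: the email column is hidden and only EU rows appear.
    CREATE VIEW customers_eu_analyst AS
        SELECT id, region, lifetime_value FROM customers WHERE region = 'EU';
""")

# Each role may read exactly one relation (base table or view).
ROLE_TO_RELATION = {"admin": "customers", "eu_analyst": "customers_eu_analyst"}


def read_as(role: str) -> list:
    """Return the rows visible to a role as a list of dicts."""
    relation = ROLE_TO_RELATION[role]  # unknown roles raise KeyError: deny by default
    cursor = conn.execute(f"SELECT * FROM {relation} ORDER BY id")
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


analyst_rows = read_as("eu_analyst")
admin_rows = read_as("admin")
```

Here the role decides which relation a user may query, while the view decides which columns and rows that relation exposes.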

Build reliability and performance into your data lake by using Delta Lake

Until now, the nature of big data has made it difficult to offer the same level of reliability and performance available with databases. Delta Lake brings these important features to data lakes.

Catalog the data in your data lake

Use data catalog and metadata management tools at the point of ingestion to enable self-service data science and analytics.
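A toy sketch of cataloging at ingestion time follows: each data set is registered with its location, a schema inferred from one sample record, and searchable tags. The `Catalog` and `CatalogEntry` classes, the example path, and the tags are hypothetical stand-ins for a real metadata management tool.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    location: str
    schema: dict  # column name -> Python type name
    tags: set = field(default_factory=set)


class Catalog:
    """In-memory catalog; a real one would persist entries in a metadata store."""

    def __init__(self) -> None:
        self._entries = {}

    def register(self, name: str, location: str, sample_record: dict, tags=()) -> CatalogEntry:
        # Infer a simple schema from one sample record at ingestion time.
        schema = {key: type(value).__name__ for key, value in sample_record.items()}
        entry = CatalogEntry(name, location, schema, set(tags))
        self._entries[name] = entry
        return entry

    def find_by_tag(self, tag: str) -> list:
        """Return the names of all data sets carrying a tag, sorted."""
        return sorted(e.name for e in self._entries.values() if tag in e.tags)


catalog = Catalog()
orders = catalog.register(
    "orders_raw",
    "raw/orders/",  # hypothetical location inside the lake
    {"order_id": 1, "amount": 19.99, "currency": "EUR"},
    tags=("sales", "raw"),
)
sales_datasets = catalog.find_by_tag("sales")
```

Registering metadata as part of ingestion means analysts can discover a data set by tag or schema without first opening the raw files.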

Shell has been undergoing a digital transformation as part of our ambition to deliver more and cleaner energy solutions. As part of this, we have been investing heavily in our data lake architecture. Our ambition has been to enable our data teams to rapidly query our massive data sets in the simplest possible way. The ability to execute rapid queries on petabyte scale data sets using standard BI tools is a game changer for us.

—Dan Jeavons, GM Data Science, Shell

Source: https://databricks.com/discover/data-lakes/introduction

Posted by: cundiffthaveling73.blogspot.com
